Navigating The JSON Interoperability Maze

JSON interoperability vulnerabilities expose a hidden risk in data exchange using JSON. These vulnerabilities arise because different JSON parsers, the software that interprets JSON data within applications, can have subtle variations in their interpretation.

Overview

In this post, we discuss what JSON is, what JSON parsers are and how they work, the main components of a parser, how JSON interoperability vulnerabilities arise, and how to mitigate them. Because JSON interoperability is a niche topic, we cover both the major and the minor attack vectors, along with techniques to defend against them.

JSON (JavaScript Object Notation) is a lightweight data format commonly used for data exchange between applications and APIs. JSON parsers translate JSON data into usable objects within a specific programming language.

Different parsers can have subtle variations in their interpretation of JSON documents because the JSON specification itself allows for some flexibility in how certain aspects are handled.

JSON parsers are essential for working with JSON data in various programming languages. They take a JSON string as input and convert it into a structured representation that the programming language can understand.

Components of JSON Parsers:

- Lexer: This function chunks the JSON string into basic building blocks (tokens like keys, values, commas, and colons).

- Parser: Analyzes the token stream based on JSON grammar rules, building a data structure that reflects the data's hierarchy.

- Error Handling: Identifies and reports invalid JSON syntax for debugging and error management.
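To make the lexer stage concrete, here is a minimal sketch of a tokenizer. The function name `tokenize` and the token shape are assumptions made for illustration only; production parsers are considerably more involved and usually interleave lexing with parsing.

```javascript
// Hypothetical tokenizer: splits JSON text into tokens and throws on
// anything that is not a valid JSON token.
function tokenize(text) {
  // One sticky pattern with a capture group per token kind:
  // 1 = punctuation, 2 = string, 3 = number, 4 = literal.
  const pattern =
    /[ \t\n\r]*(?:([{}\[\]:,])|("(?:[^"\\\u0000-\u001f]|\\(?:["\\\/bfnrt]|u[0-9a-fA-F]{4}))*")|(-?(?:0|[1-9]\d*)(?:\.\d+)?(?:[eE][+-]?\d+)?)|(true|false|null))[ \t\n\r]*/y;
  const tokens = [];
  let position = 0;
  while (position < text.length) {
    pattern.lastIndex = position;
    const match = pattern.exec(text);
    if (!match) {
      // Error handling: report where the input stopped making sense.
      throw new SyntaxError(`Unexpected character at position ${position}`);
    }
    const [, punctuation, string, number, literal] = match;
    if (punctuation) tokens.push({ type: "punctuation", value: punctuation });
    else if (string) tokens.push({ type: "string", value: string });
    else if (number) tokens.push({ type: "number", value: number });
    else tokens.push({ type: "literal", value: literal });
    position = pattern.lastIndex;
  }
  return tokens;
}

console.log(tokenize('{"name": "Alice", "age": 30}').map((t) => t.value));
```

The parser stage would then consume this token list and check it against the JSON grammar, for example that every key is followed by a colon.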

What Does a JSON parser look like?

JavaScript (Built-in JSON.parse function)

const jsonString = '{"name": "Alice", "age": 30}';

const jsonObject = JSON.parse(jsonString);

console.log(jsonObject.name); // Output: Alice

console.log(jsonObject.age); // Output: 30
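The error-handling component is visible from the outside too: JSON.parse throws a SyntaxError when the input is not valid JSON. A small sketch of wrapping that behaviour follows; the helper name `tryParseJson` and the shape of its result are assumptions for illustration.

```javascript
// Hypothetical wrapper: returns a result object instead of throwing.
function tryParseJson(text) {
  try {
    return { ok: true, value: JSON.parse(text) };
  } catch (error) {
    if (error instanceof SyntaxError) {
      return { ok: false, error: error.message };
    }
    throw error; // anything else is unexpected, so let it propagate
  }
}

console.log(tryParseJson('{"name": "Alice", "age": 30}').ok); // true
console.log(tryParseJson('{"name": "Alice", }').ok); // false (trailing comma)
```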

JSON interoperability vulnerabilities

Because parsers can interpret the same JSON document in subtly different ways, two components of one system may disagree about what a document says.

This can manifest in unexpected ways, such as parsers choosing different values for duplicate keys or disagreeing on whether key names are case-sensitive.

In critical scenarios, these inconsistencies can be exploited by attackers to manipulate data or even bypass security checks.

Types of JSON interoperability vulnerabilities:

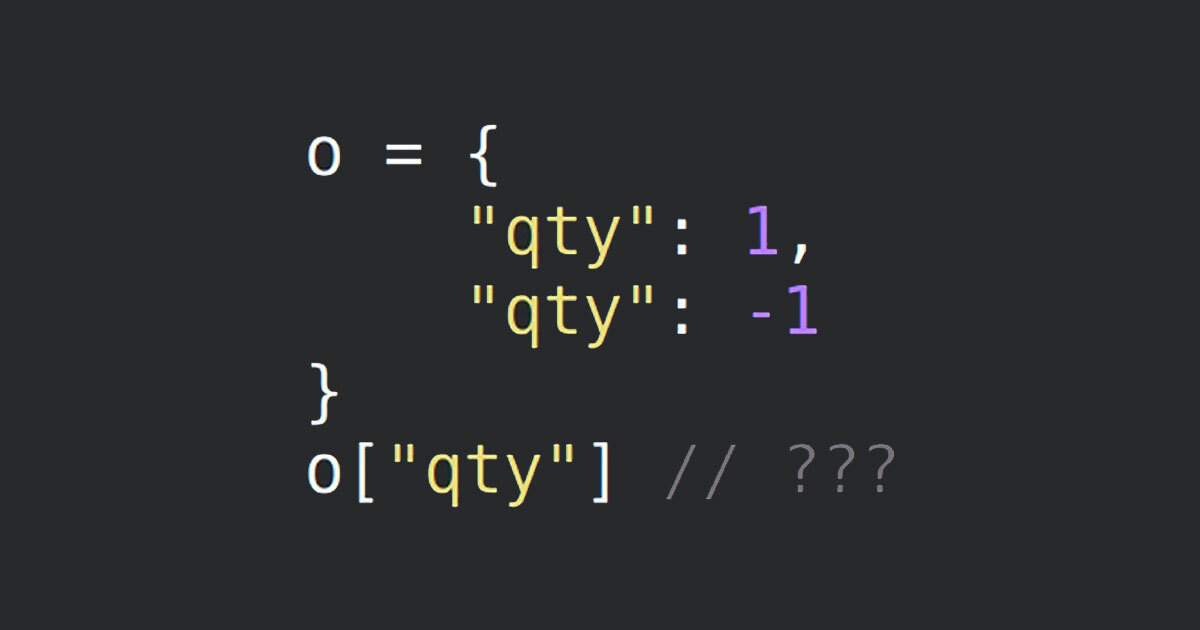

1. Inconsistent Duplicate Key Precedence

2. Key Collision: Character Truncation and Comments

3. JSON Serialization Quirks

4. Float and Integer Representation

5. Permissive Parsing and Other Bugs

Let's briefly examine each one to understand how it works and how to mitigate the risk of occurrence for each vulnerability.

1. Inconsistent Duplicate Key Precedence

Different JSON parsers may handle duplicate keys (keys appearing multiple times in the same object) inconsistently. Some parsers keep the first occurrence, others let the last value overwrite earlier ones, and stricter parsers throw an error.

This inconsistency can lead to unexpected behaviour in applications that rely on specific key-value associations. Malicious actors could potentially exploit this to inject data or manipulate values.

Example:

{

"name": "John Doe",

"age": 30,

"roles": ["admin", "user"], // Duplicate key

"roles": "editor" // This value might overwrite or be ignored depending on the parser

}

The key "roles" appears twice with different values:

"roles": ["admin", "user"]

"roles": "editor"

The problem arises because different JSON parsers might handle these duplicate keys in different ways. A parser may let the second occurrence overwrite the first, it may ignore the second "roles" and keep the first, or, if it is strict, it may throw an error on encountering duplicate keys.
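As one data point, JavaScript's built-in JSON.parse keeps the last occurrence of a duplicated key. The snippet below only shows that behaviour (the variable names are illustrative); a parser in another language on the other side of an API may well keep the first value instead.

```javascript
const documentText =
  '{"name": "John Doe", "roles": ["admin", "user"], "roles": "editor"}';

const parsedDocument = JSON.parse(documentText);

console.log(parsedDocument.roles); // editor (the later value replaced the array)
console.log(Object.keys(parsedDocument)); // [ 'name', 'roles' ]
```

If a validation service kept the first value and the application kept the last, each would act on a different set of roles for the same request.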

Mitigations

- Schema Says What's Up: Define a schema for your JSON data, including how to handle duplicate keys (overwrite, ignore, error).

- Validate Before You Integrate: Validate JSON against the schema to ensure it follows the rules, including duplicate key handling.

- Parser Choice Matters: Pick a JSON parser known for consistent duplicate key behaviour (error, overwrite, etc.).

- Standardize for Smooth Flow: If you control both sending and receiving JSON data, aim for consistency to minimize surprises.
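Validation typically runs on the already-parsed value, and by then a duplicate key has usually been collapsed into one. One way to enforce the "error" policy is to scan the raw text before trusting it. The following is a rough sketch under that assumption; `findDuplicateKeys` is a hypothetical helper, not part of any library.

```javascript
// Hypothetical helper: lists keys that occur more than once in the same object.
function findDuplicateKeys(text) {
  // Reject invalid JSON up front so the scan below only sees well-formed input.
  JSON.parse(text);

  const tokenPattern = /"(?:[^"\\]|\\.)*"|[{}\[\]:,]/g;
  const stack = []; // one frame per open object or array
  const duplicates = [];

  for (const [token] of text.matchAll(tokenPattern)) {
    const top = stack[stack.length - 1];
    if (token === "{") {
      stack.push({ isObject: true, keys: new Set(), expectKey: true });
    } else if (token === "[") {
      stack.push({ isObject: false });
    } else if (token === "}" || token === "]") {
      stack.pop();
    } else if (token === ",") {
      if (top.isObject) top.expectKey = true; // next string is a key
    } else if (token === ":") {
      top.expectKey = false; // next string, if any, is a value
    } else if (top && top.isObject && top.expectKey) {
      // Decode escapes so that "a" and "\u0061" count as the same key.
      const key = JSON.parse(token);
      if (top.keys.has(key)) duplicates.push(key);
      top.keys.add(key);
    }
  }
  return duplicates;
}

console.log(
  findDuplicateKeys('{"name": "John Doe", "roles": ["admin", "user"], "roles": "editor"}')
); // [ 'roles' ]
```

A gateway could reject any request for which this list is non-empty, so that downstream parsers never have to choose between values.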

2. Key Collision: Character Truncation and Comments

JSON parsers may not handle certain characters or comments consistently, potentially leading to key collisions (where different keys appear the same due to truncation or comment stripping).

Attackers could exploit this by crafting JSON data that contains keys that appear identical to legitimate ones but have subtle differences (e.g., using special characters or comments). These "collided" keys might bypass validation or access unauthorized data.

Example:

{

"user_id": 1234, // Legitimate key

"user_id\ud800": 1337 // Attacker-crafted key ending in an unpaired surrogate, might collide with "user_id"

}

- Invalid Character Deception: The key "user_id\ud800" ends with an unpaired UTF-16 surrogate (\ud800), which is not a valid character on its own.

- Parser Misinterpretation: Depending on the JSON parser used, the invalid character might be kept, replaced with a placeholder, or silently dropped.

- Collision: If the parser drops the character, it reads "user_id\ud800" as "user_id". The document then effectively contains a duplicate key, and the attacker's value (1337) may overwrite the legitimate user_id value (1234). A component that kept the character would have seen two different keys and let the document through.

Mitigation

- Scrub User Input: Cleanse user-provided JSON to remove risky characters.

- Encode with Consistency: Use UTF-8 encoding across systems to avoid interpretation issues.

- Clear Key Names: Ditch ambiguous key names with subtle character differences.

- Limit Comments (Optional): Restrict comments if not crucial to minimize hiding places for attackers.
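Instead of silently scrubbing risky characters, it is often safer to reject the whole document. The sketch below uses the reviver argument of JSON.parse to inspect every key; the helper name `parseWithKeyCheck` and the choice of which characters count as suspicious (control characters and unpaired surrogates) are assumptions for illustration.

```javascript
// Control characters, DEL, and unpaired surrogates. With the "u" flag a valid
// surrogate pair is treated as one code point, so only lone surrogates match.
const SUSPICIOUS_KEY = /[\u0000-\u001F\u007F\uD800-\uDFFF]/u;

// Hypothetical helper: parses JSON but refuses keys with suspicious characters.
function parseWithKeyCheck(text) {
  return JSON.parse(text, (key, value) => {
    if (SUSPICIOUS_KEY.test(key)) {
      throw new Error(`Rejected suspicious key: ${JSON.stringify(key)}`);
    }
    return value;
  });
}

console.log(parseWithKeyCheck('{"user_id": 1234}')); // { user_id: 1234 }

try {
  parseWithKeyCheck('{"user_id": 1234, "user_id\\ud800": 1337}');
} catch (error) {
  console.log(error.message);
}
```

JavaScript itself keeps both keys distinct here; the check exists to protect downstream components that might not.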

3. JSON Serialization Quirks

JSON serialization libraries from different vendors might have subtle differences when converting data structures to JSON strings. These quirks can lead to interoperability issues when different parsers encounter serialized data.

These inconsistencies could potentially be exploited to inject data or manipulate values. However, the specific risk depends on the nature of the quirk.

Example: Imagine you have an object containing a custom class instance and a circular reference:

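Potential issues with JSON serialization

class MyClass {

constructor(value) {

this.value = value;

}

}

const myObject = {

data: "This is some data",

customValue: new MyClass(42)

};

// Add the circular reference after creation: referring to myObject inside its own initializer throws a ReferenceError

myObject.circular = { self: myObject };

Custom Class Serialization: JavaScript's built-in JSON.stringify does not record that customValue is a MyClass instance. It serializes only the instance's own enumerable properties ({"value":42}), so the class identity and its methods are lost on the round trip. Other libraries and languages may add type metadata or reject such objects, which is where interoperability quirks come from.

Circular References: JSON has no way to express a cycle. JSON.stringify throws a TypeError on a circular structure, while other serializers may recurse until they exhaust the stack, truncate the output, or emit a placeholder.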
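The following sketch shows what the built-in JSON.stringify actually does in both cases. It redefines MyClass so the block is self-contained; the other variable names are illustrative.

```javascript
class MyClass {
  constructor(value) {
    this.value = value;
  }
}

// Custom class: only the own enumerable properties survive.
const serialized = JSON.stringify({ customValue: new MyClass(42) });
console.log(serialized); // {"customValue":{"value":42}}

const roundTripped = JSON.parse(serialized).customValue;
console.log(roundTripped instanceof MyClass); // false

// Circular reference: JSON.stringify refuses instead of looping.
const node = { data: "This is some data" };
node.self = node;

let circularError = null;
try {
  JSON.stringify(node);
} catch (error) {
  circularError = error;
}
console.log(circularError instanceof TypeError); // true
```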

Mitigations:

- Pick Strong Libraries: Choose established JSON serialization libraries that handle custom stuff and loops (circular references).

- Custom Serialize: If your data is unique, define a custom way to convert it to JSON format.

- Version Control Matters: Stick to the same JSON library version across your systems to avoid surprises.

- Test Your Contracts: Consider data contract testing to guarantee consistent data exchange across platforms.
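For the custom-serialization point, JavaScript offers two built-in hooks: a toJSON method, which JSON.stringify calls if present, and a reviver function passed to JSON.parse. The sketch below pairs them; the "type" tag and the function name `reviveMyClass` are conventions invented for this example, and both sides of an exchange would have to agree on them.

```javascript
class MyClass {
  constructor(value) {
    this.value = value;
  }

  // JSON.stringify calls toJSON, if present, to obtain the value to serialize.
  toJSON() {
    return { type: "MyClass", value: this.value };
  }
}

// Hypothetical reviver: rebuilds MyClass instances from the tagged form.
function reviveMyClass(key, value) {
  if (value !== null && typeof value === "object" && value.type === "MyClass") {
    return new MyClass(value.value);
  }
  return value;
}

const wireFormat = JSON.stringify({ customValue: new MyClass(42) });
console.log(wireFormat); // {"customValue":{"type":"MyClass","value":42}}

const restored = JSON.parse(wireFormat, reviveMyClass);
console.log(restored.customValue instanceof MyClass); // true
```

Note that a reviver like this trusts the tag in the incoming data, so it should only reconstruct types from an explicit allow-list.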

4. Float and Integer Representation

Different programming languages and platforms might represent floating-point numbers (decimals) with varying degrees of precision. This can lead to inconsistencies when serializing and deserializing JSON data containing floats. Similarly, integers might be represented differently (e.g., signed vs. unsigned).

In rare cases, these discrepancies could potentially be exploited for denial-of-service attacks or other security vulnerabilities. However, the likelihood depends on the specific application and data involved.

Example: Imagine you have a function that calculates a discount based on a percentage:

function calculateDiscount(price, discountPercentage) {

const discountAmount = price * discountPercentage;

const discountedPrice = price - discountAmount;

return discountedPrice;

}

const productPrice = 10.99;

const discount = 0.1; // Intended to be 10% discount

const finalPrice = calculateDiscount(productPrice, discount);

console.log(finalPrice); // Mathematically 9.891, but the floating-point result may differ in the last digits

Floating-Point Precision: JavaScript represents ordinary numbers as 64-bit IEEE 754 floating-point values. These have limited precision, so decimal values such as 0.1 cannot be stored exactly.

Integer Representation: Different systems have varying sizes and signed/unsigned behaviour for integers. For example, an ID such as 9007199254740993 fits in a 64-bit integer, but a JavaScript parser stores it as a double and rounds it to 9007199254740992, and a system limited to 32-bit signed integers may overflow on or reject any value above 2147483647.
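A few of these effects can be observed directly with the built-in JSON functions. The values below are chosen for illustration; other languages may parse the same text into different numbers, which is exactly the interoperability problem.

```javascript
// Decimal fractions are approximated in binary floating point.
console.log(0.1 + 0.2 === 0.3); // false

// Integers above 2^53 - 1 cannot all be represented as doubles.
const largeId = JSON.parse("9007199254740993");
console.log(largeId); // 9007199254740992
console.log(Number.isSafeInteger(largeId)); // false

// Numbers beyond the double range become Infinity when parsed...
const huge = JSON.parse("1e400");
console.log(huge); // Infinity

// ...and Infinity is written back out as null.
console.log(JSON.stringify({ amount: huge })); // {"amount":null}
```

A service that validates the original text and a service that re-serializes it through JavaScript would therefore see different values for the same field.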

Mitigations:

- Know Your System's Limits: Understand precision needs for numbers in your app and any data partners.

- Strings for Exactness: If super precise numbers matter, use strings instead of numbers in JSON.

- Map Data Types Clearly: Define how number types translate between your app and other systems using JSON.

- Library Precision Check: Explore JSON libraries with features for controlling number precision.
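As a sketch of the "strings for exactness" and "map data types clearly" ideas, the payload below carries a large identifier as a string and money as integer cents. The field names order_id, price_cents, and discount_percent are hypothetical.

```javascript
const payload =
  '{"order_id": "9007199254740993", "price_cents": 1099, "discount_percent": 10}';
const order = JSON.parse(payload);

// The identifier travels as a string and is converted to BigInt only when
// arithmetic is needed, so no digits are lost.
const orderId = BigInt(order.order_id);
console.log(orderId + 1n); // 9007199254740994n

// Money is handled in integer cents; rounding happens once, explicitly.
const discountCents = Math.round(
  (order.price_cents * order.discount_percent) / 100
);
const finalCents = order.price_cents - discountCents;
console.log((finalCents / 100).toFixed(2)); // 9.89
```

The rounding rule (here, round half up to the nearest cent) is itself part of the contract and should be the same on every system that computes the price.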

5. Permissive Parsing and Other Bugs

JSON parsers may be overly permissive in accepting invalid or malformed JSON data. If the parser doesn't handle errors gracefully, this can lead to unexpected behaviour or potential security vulnerabilities.

Malicious actors could craft malformed JSON data that exploits parser bugs or permissive handling to inject code, bypass validation, or cause crashes.

Permissive JSON parsers can be a security hazard, but they're not the only potential issue.

Mitigations:

- Strict Parsing: Choose JSON parsers known for strictness, so that malformed input is rejected rather than silently reinterpreted.

- Sanitize Input: Cleanse user-provided JSON data before parsing to remove potentially risky characters or code.

- Validate Your Data: Enforce data validation to ensure the JSON structure adheres to your expectations and doesn't contain vulnerabilities.

- Stay Updated: Keep your JSON libraries and parsers up-to-date to benefit from bug fixes and security patches.
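Strict parsing and validation can be combined in one step. JavaScript's JSON.parse is already strict about syntax (it rejects comments, trailing commas, and single-quoted strings), so the sketch below adds a shape check on top. The function name `parseUserStrict` and the expected fields are assumptions for illustration.

```javascript
// Hypothetical validator for payloads of the form
// {"user_id": <integer>, "name": <string>} with no other keys.
function parseUserStrict(text) {
  const value = JSON.parse(text); // throws SyntaxError on malformed JSON

  if (value === null || typeof value !== "object" || Array.isArray(value)) {
    throw new TypeError("Expected a JSON object");
  }

  const allowedKeys = new Set(["user_id", "name"]);
  for (const key of Object.keys(value)) {
    if (!allowedKeys.has(key)) {
      throw new TypeError(`Unexpected key: ${JSON.stringify(key)}`);
    }
  }

  if (!Number.isSafeInteger(value.user_id)) {
    throw new TypeError("user_id must be a safe integer");
  }
  if (typeof value.name !== "string") {
    throw new TypeError("name must be a string");
  }
  return value;
}

console.log(parseUserStrict('{"user_id": 1234, "name": "Alice"}'));
```

Rejecting unknown keys is a deliberate choice here: it stops look-alike keys from travelling further into the system unnoticed.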

Conclusion

We've delved deep into JSON interoperability attacks, from understanding how different parsers handle data to uncovering the sneaky vulnerabilities that can hide within.

Treating JSON with respect is essential. By knowing how JSON works under the hood and being aware of potential pitfalls, you can build applications that are both robust and secure. Remember, it's not just about getting data from A to B; it's about ensuring the journey is safe and smooth.

So, next time you work with JSON, consider the bigger picture. A little extra care now can save you from major headaches later.
